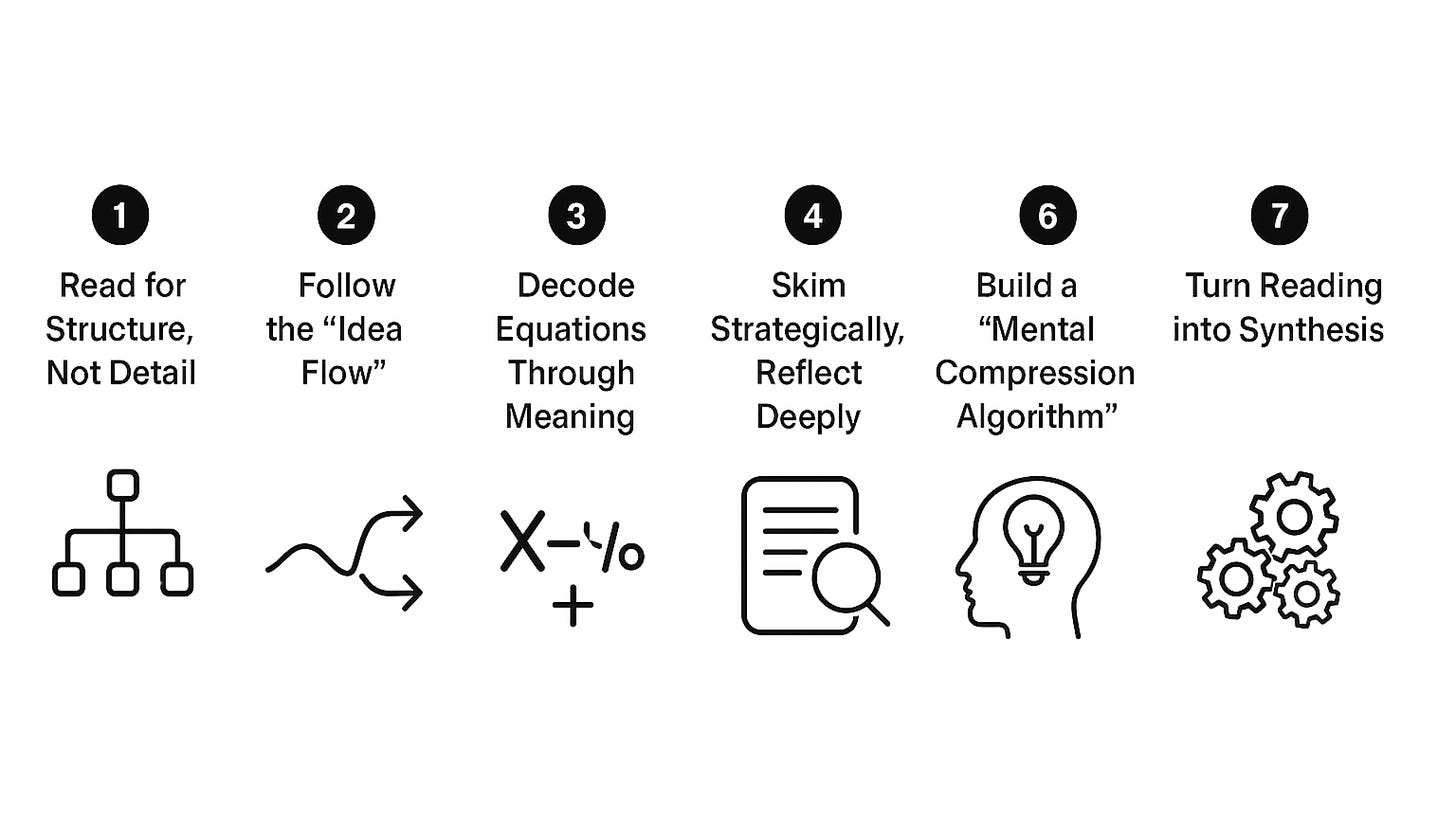

How to Read Machine Learning Papers Fast but Still Learn: The Top 7 Strategies from an ICLR Reviewer

Master the art of decoding ML papers: grasp the key ideas, skip the fluff, and learn smarter in less time. The top 7 strategies for approaching scientific reading, from an experienced ICLR reviewer (2025 and 2026).

When I first began reviewing papers for ICLR while conducting my own research in quantum machine learning, I was overwhelmed by the number of PDFs—each one dense with equations, acronyms, and claims of “state-of-the-art” breakthroughs. I’d spend hours decoding every detail, only to realize later that I’d missed the point. Over time, through exhaustion and repetition, I discovered a better way: read for structure, not detail; meaning, not math. Once I started treating papers like systems—identifying their symmetry, flow, and core bottleneck—I could extract insights in minutes instead of hours. Reading fast, I learned, isn’t about skipping; it’s about seeing the architecture of ideas beneath the clutter.

Scientific papers are the heartbeat of machine learning research: each one is a snapshot of human ingenuity, distilled into dense mathematics and terse paragraphs. Yet for many of us, these papers feel more like encrypted messages than insightful stories. Absorbing the essence of a paper without drowning in its details is therefore a critical skill. After years of reading, reviewing, and writing research papers across quantum computing and machine learning, often under intense deadlines such as ICLR 2025 and 2026, I’ve developed a structured way to read papers fast while still learning deeply. What separates a casual skimmer from an efficient learner isn’t speed; it’s strategy. Here are the strategies.

1. Read for Structure, Not Detail

When you first open a paper, resist the urge to understand everything. Great readers don’t dive into the math right away—they map the terrain.

Start with the abstract, results, and conclusion. These sections tell you the problem, the empirical results, and the claimed contribution. Your first question should be: Why does this paper exist, and why does it matter?

Most papers can be classified into three archetypes:

New idea or architecture (introducing a new model or approach, like “Attention Is All You Need,” the paper that introduced the Transformer)

Improvement (making something faster, smaller, or more robust)

Analysis (understanding why something works or fails, like OpenAI’s paper “Why Language Models Hallucinate”)

Identifying which type you’re reading tells you how to read it. A new idea requires conceptual focus; an improvement demands experimental scrutiny; an analysis paper calls for logical dissection.

2. Follow the “Idea Flow”

Think of a paper as a river. The idea begins in the introduction, branches through methodology, and flows into experiments. Don’t get stuck fishing for details—follow the current.

As you read, ask:

What’s the core intuition behind the mathematical formulation?

How does it differ from prior work or my current research?

What’s the main bottleneck they’re solving, and how exactly do they solve it?

When I reviewed papers for ICLR, I noticed that the best ones have a narrative thread—a single insight that flows through the equations. The mediocre ones drown readers in math but hide the point. Reading well means extracting that narrative and ignoring the decorative algebra around it.

3. Decode Equations Through Meaning

Mathematics is the language of machine learning, and of nearly every scientific field, but it’s also where most of the pain lies. The trick is not to compute but to translate.

Ask yourself: What is this equation trying to express conceptually?

For example, when you see a loss function, focus less on the summations and more on what the loss is optimizing for. Is it aligning embeddings? Maximizing margin? Preserving energy? Once you capture that essence, you can reconstruct the math later if needed.
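To see what this translation looks like in practice, here is a minimal plain-Python sketch of an InfoNCE-style contrastive loss, one common way of “aligning embeddings.” I chose it purely as an illustration; it is not taken from any paper mentioned here, and the temperature of 0.1 is an assumed value. The comments restate each term as the question it asks rather than as symbols.

```python
import math


def cosine(u, v):
    """Cosine similarity: do two embedding vectors point the same way?"""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE-style contrastive loss, annotated for meaning.

    anchors[i] and positives[i] form a matched pair (e.g. two views of one image);
    every positives[j] with j != i serves as a negative for anchors[i].
    temperature=0.1 is an illustrative assumption, not a recommended setting.
    """
    total = 0.0
    for i, anchor in enumerate(anchors):
        # "How similar is this anchor to each candidate?" (scaled by temperature)
        logits = [cosine(anchor, p) / temperature for p in positives]
        # Softmax denominator as a numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        # "Did the true partner win the 'which one is mine?' vote?"
        # This is -log softmax(logits)[i]: near 0 when it wins clearly, large when it loses.
        total += log_denominator - logits[i]
    # Average over anchors.
    return total / len(anchors)


# Matched pairs point the same way -> low loss; swapped pairs -> high loss.
aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]])
swapped = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.1, 0.9], [0.9, 0.1]])
print(aligned < swapped)  # True
```

Read this way, the whole equation collapses to one sentence: each anchor should pick out its own partner from a crowd of impostors, and the loss grows when it picks wrong.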

The goal isn’t symbolic memorization; it’s conceptual understanding. The best researchers can explain their equations without writing them down, and can describe the model of computation in plain natural language.

4. Skim Strategically, Reflect Deeply

Speed doesn’t come from reading faster—it comes from knowing what to skip.

You can usually skim:

Dataset descriptions (unless the dataset is novel)

Hyperparameter sections (read only if you plan to reproduce)

Related work (read selectively to understand positioning)

But never skim the figures. Good figures often encode intuition that the text fails to convey. I once understood an entire transformer variant purely by studying its architecture diagram before reading the corresponding section.

After skimming, pause. Reflection is the real accelerator. Spend a few minutes writing a summary in your own words—one paragraph that captures the why, how, and so what.

5. Build a “Mental Compression Algorithm”

The human brain is, in essence, a compression system. Efficient readers compress information into reusable mental models.

When you read papers, categorize insights into reusable patterns:

Architecture patterns (e.g., residual connections, attention)

Optimization patterns (e.g., contrastive loss, curriculum learning)

Conceptual patterns (e.g., inductive bias, compositionality)

Soon, new papers stop feeling new—they become variations on familiar motifs. You’ll read faster because you’re no longer learning from scratch; you’re mapping deltas between what you know and what’s new.
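If you like to keep notes in code, the “mapping deltas” idea can be made literal: store your pattern library as plain data and diff each new paper against it. This is a hypothetical sketch; the library contents mirror the categories above, and the tags for the new paper are invented for illustration.

```python
# A personal pattern library, grouped by the three categories above (illustrative contents).
KNOWN_PATTERNS = {
    "architecture": {"residual connections", "attention"},
    "optimization": {"contrastive loss", "curriculum learning"},
    "conceptual": {"inductive bias", "compositionality"},
}


def delta(paper_tags, library):
    """Split a paper's tags into motifs you already know and genuinely new ones."""
    known = set().union(*library.values())
    return {
        "familiar": sorted(paper_tags & known),
        "new": sorted(paper_tags - known),
    }


# Hypothetical tags for a paper you just skimmed.
paper = {"attention", "contrastive loss", "mixture of experts"}
before = delta(paper, KNOWN_PATTERNS)
print(before)  # {'familiar': ['attention', 'contrastive loss'], 'new': ['mixture of experts']}

# Once you understand the new motif, file it so the next paper that uses it reads faster.
KNOWN_PATTERNS["architecture"].add("mixture of experts")
after = delta(paper, KNOWN_PATTERNS)
print(after["new"])  # []
```

The only part that deserves your full attention is the `new` list; everything in `familiar` can be read at skimming speed.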

6. Read with a Researcher’s Mindset

The purpose of reading isn’t to admire; it’s to engage. Each paper represents a conversation.

As you read, maintain an internal dialogue:

Do I believe this claim or not?

What assumption might break this method?

Could I extend this idea to my own problem, or use it to validate my hypothesis?

The difference between reading as a student and reading as a researcher is simple: students seek understanding, researchers seek opportunity.

7. Turn Reading into Synthesis

Learning ends when you close the paper—but mastery begins when you connect it to others.

Make it a habit to write one synthesis paragraph after every few papers, comparing them by:

Problem framing

Key idea(s)

Limitations

Potential combination or new ideas
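For those who keep reading notes in a notebook, this comparison can live in a small structured record per paper and be rendered as a side-by-side table. A minimal sketch, assuming Python 3.9+; the `PaperNote` class, the `synthesis_table` function, and the two example entries are hypothetical placeholders rather than summaries of real papers.

```python
from dataclasses import dataclass, field


@dataclass
class PaperNote:
    """One paper, reduced to the four comparison criteria."""
    title: str
    problem_framing: str
    key_ideas: list[str]
    limitations: list[str]
    combination_ideas: list[str] = field(default_factory=list)


def synthesis_table(notes):
    """Render notes as a markdown table, one column per paper, so differences stand out."""
    rows = [
        ("Problem framing", lambda n: n.problem_framing),
        ("Key idea(s)", lambda n: "; ".join(n.key_ideas)),
        ("Limitations", lambda n: "; ".join(n.limitations)),
        # Show "-" when no combination idea has been written down yet.
        ("Combination / new ideas", lambda n: "; ".join(n.combination_ideas) or "-"),
    ]
    header = "| Criterion | " + " | ".join(n.title for n in notes) + " |"
    divider = "|" + " --- |" * (len(notes) + 1)
    lines = [header, divider]
    for label, get in rows:
        lines.append(f"| {label} | " + " | ".join(get(n) for n in notes) + " |")
    return "\n".join(lines)


# Placeholder entries; replace with your own notes.
notes = [
    PaperNote("Paper A", "Speed up inference", ["pruning"], ["accuracy drop on small data"]),
    PaperNote("Paper B", "Explain failure cases", ["probing study"], ["single model family"],
              ["use the probe from B to guide pruning in A"]),
]
table = synthesis_table(notes)
print(table)
```

Keeping the criteria fixed across papers is the point of the design: empty or thin cells show you exactly where your understanding of a paper is still shallow.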

This transforms reading into meta-learning—understanding how the field itself evolves. Over time, you stop reading papers in isolation and start seeing the grand tapestry of machine learning progress.

The Meta-Truth: Reading Is Thinking

Fast reading isn’t about finishing quickly; it’s about minimizing wasted cognition. The best readers don’t process every word—they extract meaning at the right level of abstraction.

You can read a paper deeply and learn nothing if your mind remains passive. Or you can read ten papers strategically and extract insights that reshape your research direction.

The art of reading ML papers fast but deeply lies in this paradox: the less you try to understand everything, the more you end up understanding what matters.